During my time at the Le Wagon Data Science bootcamp, I participated in an exciting and intense 9-week journey to deepen my understanding of data science. The final two weeks were dedicated to a group project, where my team and I had the opportunity to apply everything we had learned. Our project, Hands Up, was a challenging yet incredibly rewarding experience that focused on a meaningful real-world problem: translating sign language into text using deep learning.

The Problem We Set Out to Solve

Sign language is a critical tool for communication in the deaf and hard-of-hearing communities, but learning it can be a challenge for hearing individuals. Many existing apps that aim to help users learn sign language lack interactivity and struggle to recognize signs from video inputs, which reduces their effectiveness.

Our goal with Hands Up was to address this gap by creating a web-based application that can translate videos of people performing sign language into corresponding words using deep learning. This would make learning sign language more accessible and interactive for users, while also supporting those looking to better communicate with their deaf or hard-of-hearing family members and friends.

The Technical Approach

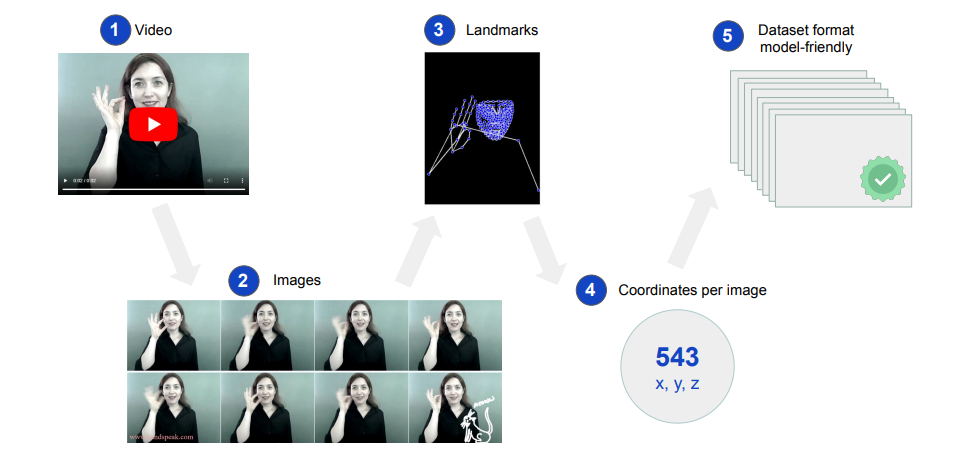

At the heart of the project was a deep learning model capable of translating sign language from video into text. Here’s a breakdown of the key steps we followed to make this happen:

Dataset and Preprocessing: We worked with a large dataset of 95,000 videos, each labeled with a word corresponding to the performed sign. Our first challenge was handling this massive dataset, which weighed in at 56 GB. Each video had a unique length and quality, so our first task was to preprocess the data to standardize it for our model.

Extracting Landmarks: To recognize the sign in each video, we used the Holistic MediaPipe API, which detects the positions of key points (landmarks) on the hands, face, and body. These landmarks were then converted into numerical data, giving us a sequence of coordinates for each video. This transformation allowed us to work with the videos as structured tables of data rather than raw images.

Model Training: The challenge of training the model lay in ensuring that it could accurately interpret signs, even with the variability in video quality and length. We trained the model to map the landmarks extracted from the videos to their respective labels, such as “cat” or “hello.” Preprocessing played a crucial role in making the data compatible with the model’s input format.

Building the Application: For the frontend, we used Streamlit to create a user-friendly interface where anyone could upload an MP4 video of a sign and receive its translation in text. We packaged everything using Docker to make the system easily deployable in the cloud.

The Results

Once trained, we evaluated our model on 9,000 test videos. The model achieved 65% accuracy, meaning that for 65 out of 100 videos, it correctly translated the sign into the intended word. While this wasn’t perfect, it was a result we were proud of. Interestingly, when the model made errors, the predicted words often had a similar sign to the correct word, indicating that it was learning the nuances of sign language effectively.

What I Learned

This project was an eye-opener for me, not just technically, but also in terms of working on a socially impactful solution. Here are some key takeaways:

Handling Large Datasets: One of the biggest challenges was managing the 95,000 videos. We had to optimize our workflows for processing this large dataset efficiently, which involved learning advanced data preprocessing techniques and managing computational resources effectively.

Deep Learning for Video: Prior to this, my work had primarily been with static datasets. Working with video data introduced new complexities—dealing with varying video lengths, extracting meaningful patterns from a sequence of images, and ensuring that the model could handle real-world data variability.

Sign Language Complexity: Diving into the world of sign language gave me a new appreciation for its richness and complexity. The same word can be signed in multiple ways depending on the context, region, or signer. This made the task even more challenging, but it also reinforced the potential impact that a tool like Hands Up could have.

Teamwork and Collaboration: The project was highly collaborative, and I was lucky to work with a motivated and talented team. Each of us brought different strengths to the table, from frontend development with Streamlit to model training and data processing. The synergy we developed as a team was key to overcoming the various hurdles we faced.

Looking Forward

While we’re proud of the model we built, there’s still a lot of potential for Hands Up. Future improvements could include:

Optimizing for Mobile: Currently, the model runs in the cloud, but to make it more accessible, it would need to be optimized to run efficiently on mobile devices. This would allow users to translate signs on the go, directly from their phones.

Sentence Translation: Our model translates isolated signs, but a next step could be extending it to handle full sentences or conversations in sign language. This would make the application much more versatile and useful for real-world communication.

Final Thoughts

Hands Up was an incredibly rewarding project that allowed me to apply my machine learning knowledge to a meaningful problem. From working with massive datasets to fine-tuning a deep learning model, the experience taught me invaluable lessons about both the technical and human aspects of problem-solving. I’m excited to see how this project could evolve and hope to continue working on innovative solutions that make a difference.

A big thanks to my amazing teammates—Hellena, Laura, and Alex—and our Le Wagon instructors for their guidance and support throughout this journey.